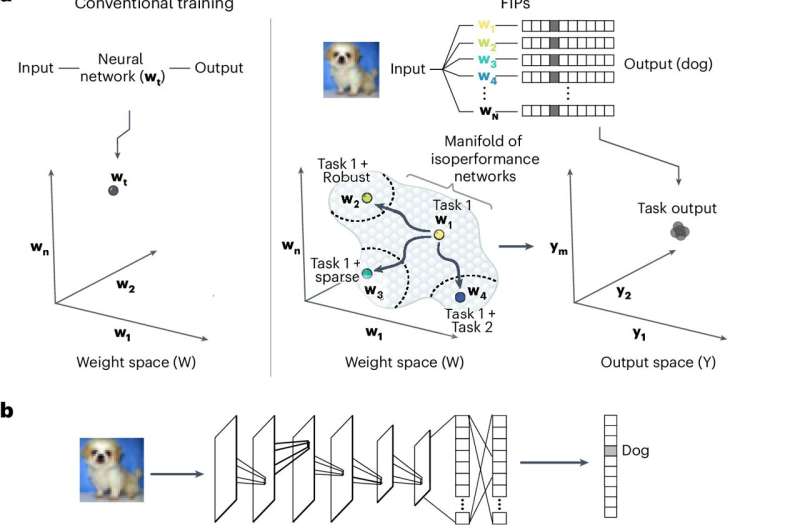

Researchers at Caltech have developed an innovative algorithm, inspired by the flexibility of the human brain, that enables neural networks to continuously learn new tasks without forgetting previous knowledge. This breakthrough, known as the Functionally Invariant Path (FIP) algorithm, has wide-ranging applications from improving online recommendations to fine-tuning self-driving cars. The algorithm’s ability to overcome ‘catastrophic forgetting’ in AI systems represents a significant step forward in the quest for more adaptable and robust machine learning models. Neural networks and artificial intelligence are poised to transform various industries, and this innovation could pave the way for more flexible and responsive AI solutions.

Defeating The Curse of Catastrophic Forgetting

Neural networks can learn to perform tasks such as recognising handwritten digits, feats that earlier computer programs could not match. However, these models often suffer from what is referred to as ‘catastrophic forgetting’, where they forget how to perform old tasks after being trained on new ones. Artificial neural networks lack the flexibility seen in the brains of humans and most other animals; they can typically only learn to do one specific task or activity very well.

Drawing on neuroscience work on the brain’s ability to rewire itself and learn new skills after injury, the Caltech team set about designing an algorithm that would allow a neural network to retain what it has already learned as it learns more. The result is the Functionally Invariant Path (FIP) algorithm, a technique that could change how machine learning models are trained and updated.

Bridging the Gap Between Artificial and Biological Neural Networks

The FIP algorithm was created by a team of Caltech researchers, including Matt Thomson, assistant professor of computational biology and Heritage Medical Research Institute (HMRI) Investigator. They were inspired by the work of Carlos Lois, a Research Professor of Biology at Caltech, who studies how birds can rewire their brains to relearn how to sing after a brain injury.

Humans show a similar capacity: after stroke-induced brain damage, people can form new neural connections and find new ways of performing everyday tasks. The FIP algorithm was designed to give neural networks this same flexibility, so that they can be updated with new data without forgetting what they learned before. The researchers achieved this using a mathematical technique called differential geometry, which provides a way of modifying neural networks without bringing about catastrophic forgetting.
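The idea of changing a network while leaving its behavior on earlier data intact can be illustrated with a toy sketch. The code below is not the FIP algorithm itself. It is a much simpler stand-in, assuming a single linear unit `y = w . x`, in which each gradient step for a new task is projected so that it cannot change the outputs on a few "old" inputs. All names, sizes, and the learning rate are hypothetical choices made for illustration.

```python
import random

random.seed(0)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def orthonormal_basis(vectors):
    """Gram-Schmidt: orthonormal basis for the span of `vectors`."""
    basis = []
    for v in vectors:
        for b in basis:
            c = dot(v, b)
            v = [vi - c * bi for vi, bi in zip(v, b)]
        norm = dot(v, v) ** 0.5
        if norm > 1e-12:
            basis.append([vi / norm for vi in v])
    return basis

def project_out(g, basis):
    """Remove from g every component lying in the span of `basis`."""
    for b in basis:
        c = dot(g, b)
        g = [gi - c * bi for gi, bi in zip(g, b)]
    return g

n = 8  # number of weights in the toy model (assumption)
w0 = [random.gauss(0, 1) for _ in range(n)]
old_inputs = [[random.gauss(0, 1) for _ in range(n)] for _ in range(3)]
new_data = [([random.gauss(0, 1) for _ in range(n)], random.gauss(0, 1))
            for _ in range(3)]

old_outputs_before = [dot(w0, x) for x in old_inputs]
basis = orthonormal_basis(old_inputs)

def new_task_loss(w):
    return 0.5 * sum((dot(w, x) - y) ** 2 for x, y in new_data)

def train(project):
    """Gradient descent on the new task; optionally project each step."""
    w = list(w0)
    for _ in range(2000):
        grad = [0.0] * n
        for x, y in new_data:
            err = dot(w, x) - y
            grad = [g + err * xi for g, xi in zip(grad, x)]
        # A projected step is orthogonal to every old input, so it cannot
        # change the model's output on those inputs.
        step = project_out(grad, basis) if project else grad
        w = [wi - 0.01 * si for wi, si in zip(w, step)]
    drift = max(abs(dot(w, x) - y0)
                for x, y0 in zip(old_inputs, old_outputs_before))
    return new_task_loss(w), drift

loss_start = new_task_loss(w0)
naive_loss, naive_drift = train(project=False)
projected_loss, projected_drift = train(project=True)

print(f"start loss {loss_start:.4f}")
print(f"naive:     loss {naive_loss:.4f}, old-output drift {naive_drift:.2e}")
print(f"projected: loss {projected_loss:.4f}, old-output drift {projected_drift:.2e}")
```

In this sketch the unprojected run changes the outputs on the old inputs (a small-scale version of forgetting), while the projected run leaves them unchanged up to floating-point error and still lowers the new-task loss. For a nonlinear network, output-preserving directions depend on the current weights, which is where geometric tools become relevant.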

Application of the FIP Algorithm

The relevance of the FIP algorithm extends well beyond academic research. The team that included former graduate student Guru Raghavan, Ph. Although the new technology is still in its early stages of development, Sherif Elsayed-Ali, Ph.

One example is improving the recommendation engine of an online store. Because such engines learn from user behavior, and that behavior inevitably changes over time, their underlying neural networks could keep learning from new data and deliver more personalized, relevant recommendations without a fundamental system redesign.

Another promising use is fine-tuning self-driving car systems. If an autonomous vehicle finds itself in a new environment with slightly different rules of the road, the FIP algorithm could allow it to adapt and learn without disrupting the capabilities that already let it read traffic signals or handle complex intersections.

Guided by Caltech Entrepreneur in Residence Julie Schoenfeld, Raghavan and Thomson have co-founded a company called Yurts to continue developing the FIP algorithm and make machine learning systems widely available. The company is focused on bringing the technology to a range of industries, with the goal of more flexible and robust artificial intelligence.