Researchers at the USC Viterbi School of Engineering’s Information Sciences Institute (ISI) are investigating whether advanced AI models can perform nonverbal abstract reasoning, a task once reserved for the human mind. This research could pave the way for machines that not only understand but also reason, blurring the line between machine intelligence and human cognition.

Revealing the Cognition in AI

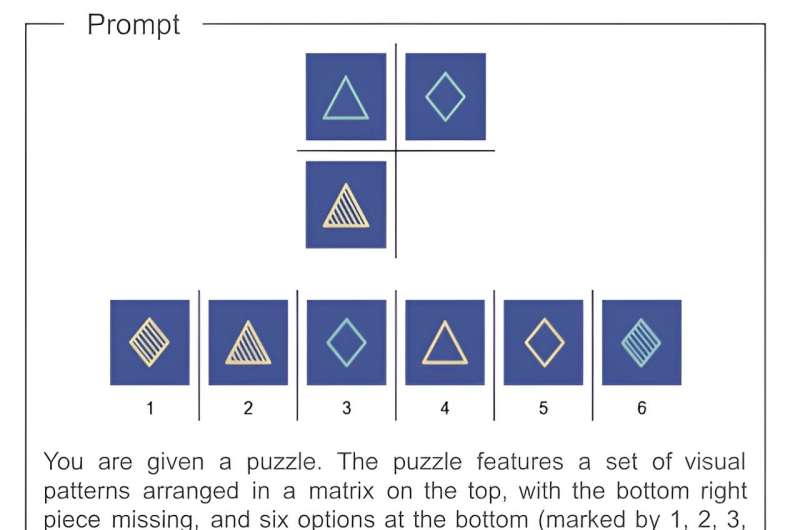

AI has proven it can master language, art generation and even chess. The newest frontier is abstract reasoning: the confounding visual puzzles that until now have been the exclusive domain of the human mind. To find out whether AI can handle them, researchers at the USC Viterbi School of Engineering’s Information Sciences Institute (ISI) put the latest multi-modal large language models (MLLMs) to work on nonverbal abstract reasoning, one of humanity’s most elusive yet defining traits.

The team, which included Research Assistants Kian Ahrabian and Zhivar Sourati, recently presented the details at the Conference on Language Modeling (COLM 2024) in Philadelphia. The study, posted on the arXiv preprint server, highlights both the present constraints and future promise of AI reasoning.

Extending the Limits of AI Reasoning

In this work, the researchers developed a new dataset to evaluate MLLMs on reasoning, built from Raven’s Progressive Matrices (RPM) puzzles, one of the most established tests of abstract reasoning. They tested 24 different MLLMs on these puzzles. The open-source models performed poorly on both fronts: they found it challenging to perceive visual patterns and to reason logically about them. Closed-source models from private companies, such as GPT-4V, outperformed them.

“Even for closed-source models, we saw some nontrivial results,” Ahrabian said. GPT-4V in particular was relatively good at reasoning, but it is far from perfect. This uneven performance underscores the need for more sophisticated AI training, including more data and more compute, so that models can better handle tasks that draw on several cognitive abilities at once.

Tallying the Roadblocks of AI Reasoning

An important part of the investigation involved figuring out where and how these models were breaking down. The researchers found that the problem lay not only in visual processing but in reasoning itself. To separate the two, they supplied detailed textual descriptions of the images, down to fine-grained details, so that the models had access to the same information in another format. Even on that footing, most models still averaged correct reasoning on only 0–2 examples.
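To make the idea of a textual description concrete, here is a minimal sketch of how a puzzle grid might be rendered as plain text. The grid encoding, the `describe_grid` helper and the toy puzzle are illustrative assumptions, not the format the researchers used.

```python
from typing import List, Optional, Tuple

# Hypothetical encoding: each cell is (shape, count); None marks the missing cell.
Cell = Optional[Tuple[str, int]]


def describe_grid(grid: List[List[Cell]]) -> str:
    """Render a grid of (shape, count) cells as one sentence per cell."""
    lines = []
    for r, row in enumerate(grid, start=1):
        for c, cell in enumerate(row, start=1):
            if cell is None:
                lines.append(f"Row {r}, column {c}: missing (to be inferred).")
            else:
                shape, count = cell
                noun = shape if count == 1 else shape + "s"
                lines.append(f"Row {r}, column {c}: {count} {noun}.")
    return "\n".join(lines)


# Toy puzzle (an assumption for illustration): the count grows by one across each row.
toy_grid = [
    [("circle", 1), ("circle", 2), ("circle", 3)],
    [("square", 1), ("square", 2), ("square", 3)],
    [("triangle", 1), ("triangle", 2), None],
]

description = describe_grid(toy_grid)
print(description)
```

A model given this text no longer needs to perceive anything; it only has to spot the rule and apply it to the missing cell, which is the ability the text-only setting is meant to isolate.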

With this finding in hand, the researchers turned to a strategy called Chain of Thought prompting, in which the AI is led through its reasoning step by step. In some cases this produced substantial gains, with performance improving by as much as 100%.
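The difference between a direct prompt and a Chain of Thought prompt can be sketched in a few lines. The wording of the instructions, the `build_prompt` helper and the example puzzle are assumptions for illustration; the study's actual prompts are not given here.

```python
from typing import List


def build_prompt(puzzle: str, choices: List[str], chain_of_thought: bool = False) -> str:
    """Assemble a text prompt for a pattern puzzle, optionally asking for stepwise reasoning."""
    lines = [
        "Below is a description of a 3x3 pattern puzzle whose last cell is missing.",
        puzzle,
        "Candidate answers:",
    ]
    # Label the candidates A, B, C, ...
    lines += [f"{chr(ord('A') + i)}. {choice}" for i, choice in enumerate(choices)]
    if chain_of_thought:
        # Hypothetical instruction wording that asks for intermediate steps first.
        lines.append(
            "Let's think step by step: describe the pattern in each row, "
            "state the rule, then give the answer letter."
        )
    else:
        lines.append("Reply with the answer letter only.")
    return "\n".join(lines)


puzzle_text = "Row 3 contains 1 triangle, then 2 triangles, then a missing cell."
options = ["3 triangles", "1 triangle", "3 circles"]

direct_prompt = build_prompt(puzzle_text, options)
cot_prompt = build_prompt(puzzle_text, options, chain_of_thought=True)
print(cot_prompt)
```

Both prompts carry the same puzzle and the same candidate answers; only the final instruction changes, so any difference in accuracy can be attributed to the request for intermediate reasoning.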

There is still a lot of work to be done but the researchers are confident. These results point to the current weaknesses of AI as well as what we have to look forward to in years down the road. These models are still in development, but as they improve, the work of USC researchers could eventually result in AI that not just understands but reasons — raising new questions about when artificial intelligence ends and human thinking begins.