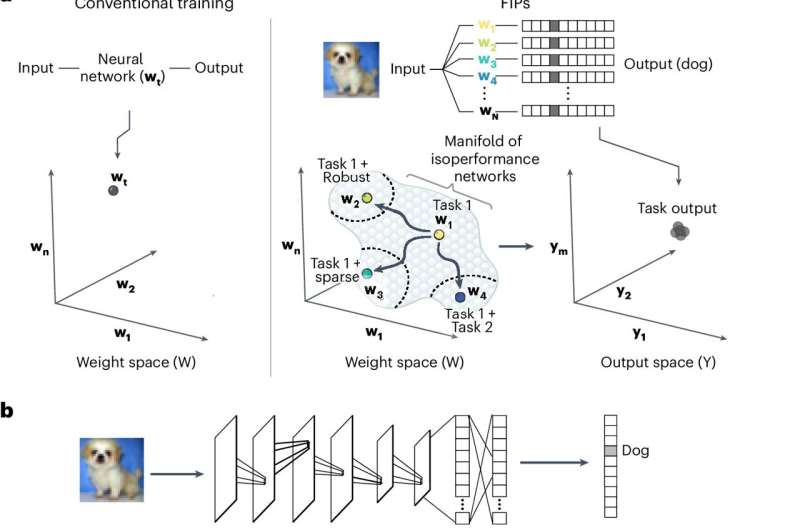

Researchers at the California Institute of Technology (Caltech) have developed an algorithm, called the Functionally Invariant Path (FIP) algorithm, that lets neural networks keep learning new tasks without ‘forgetting’ what they already know. Inspired by the flexibility of the human brain, the work has potential applications ranging from e-commerce recommendations to self-driving cars. The algorithm uses differential geometry to modify a neural network without losing previously encoded information, addressing the longstanding problem of ‘catastrophic forgetting’ in artificial intelligence.

Overcoming the Limitations of Traditional Neural Networks

Neural networks have become a cornerstone of modern artificial intelligence, excelling at tasks such as image recognition and natural language processing. However, these models often suffer from a phenomenon known as ‘catastrophic forgetting’. When a network that has already learned one task is trained on a new one, it can learn the new task, but often at the cost of forgetting how to perform the original.
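The effect is easy to reproduce on a very small scale. The following is a minimal sketch, assuming a linear model with ten weights and two made-up regression tasks (`X_a`, `y_a`, `X_b`, `y_b` and the helper names are hypothetical and chosen only for illustration). The model is fitted to task A with plain gradient descent, then to task B, and its error on task A is measured before and after.

```python
import numpy as np

rng = np.random.default_rng(0)
n_weights, n_samples = 10, 3

# Two hypothetical regression tasks, each generated by a different rule.
X_a = rng.normal(size=(n_samples, n_weights))
X_b = rng.normal(size=(n_samples, n_weights))
y_a = X_a @ rng.normal(size=n_weights)
y_b = X_b @ rng.normal(size=n_weights)


def loss(w, X, y):
    """Mean squared error of the linear model X @ w against targets y."""
    return float(np.mean((X @ w - y) ** 2))


def train(w, X, y, steps=5000):
    """Plain gradient descent on the mean squared error, starting from w."""
    # Step size = 1 / (largest curvature of the loss), which keeps descent stable.
    lr = len(y) / (2 * np.linalg.norm(X, 2) ** 2)
    for _ in range(steps):
        grad = 2 / len(y) * X.T @ (X @ w - y)
        w = w - lr * grad
    return w


w_after_a = train(np.zeros(n_weights), X_a, y_a)  # learn task A
w_after_b = train(w_after_a, X_b, y_b)            # then learn task B

loss_a_before = loss(w_after_a, X_a, y_a)
loss_a_after = loss(w_after_b, X_a, y_a)
loss_b_after = loss(w_after_b, X_b, y_b)
print(f"task A loss after training on A: {loss_a_before:.6f}")
print(f"task A loss after training on B: {loss_a_after:.6f}")
print(f"task B loss after training on B: {loss_b_after:.6f}")
```

Nothing in the second call to `train` knows about task A, so the weights are free to drift to wherever task B is solved, and the error on task A rises again. Real networks are nonlinear and far larger, but the mechanism is the same.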

This limitation poses significant challenges for applications that require continuous learning, such as self-driving cars. Traditionally, these models have had to be retrained from scratch to acquire new capabilities, which is both time-consuming and resource-intensive. In contrast, the human brain is remarkably flexible, allowing us to learn new skills easily without losing our previous knowledge and abilities.

The Inspiration from Neuroscience and Biological Flexibility

Inspired by the flexibility of human and animal brains, the Caltech researchers set out to develop a new algorithm that could overcome the limitations of traditional neural networks. They were particularly intrigued by the work of Carlos Lois, a Research Professor of Biology at Caltech, who studies how birds can rewire their brains to learn how to sing again after a brain injury.

This observation, along with the understanding that humans can forge new neural connections to relearn everyday functions after a stroke, motivated the Caltech team to create the Functionally Invariant Path (FIP) algorithm. The algorithm, developed by Matt Thomson, Assistant Professor of Computational Biology, and former graduate student Guru Raghavan, Ph.D., uses differential geometry, a branch of mathematics, to allow neural networks to be modified without losing previously encoded information.
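The geometric intuition, changing the weights only along directions that leave the network's existing behaviour untouched, can be illustrated with a toy example. The sketch below is not the FIP algorithm itself, which operates on nonlinear networks and is considerably more involved. It is a simplified stand-in that assumes a linear model, for which the output-preserving directions can be written down exactly as the null space of the old task's inputs. All names and data (`X_a`, `X_b`, `P`, and so on) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_weights, n_samples = 10, 3

# Hypothetical old task (A) and new task (B), as inputs and targets.
X_a = rng.normal(size=(n_samples, n_weights))
X_b = rng.normal(size=(n_samples, n_weights))
y_a = X_a @ rng.normal(size=n_weights)
y_b = X_b @ rng.normal(size=n_weights)


def loss(w, X, y):
    """Mean squared error of the linear model X @ w against targets y."""
    return float(np.mean((X @ w - y) ** 2))


# Weights that already solve task A (minimum-norm least-squares fit).
w_a = np.linalg.pinv(X_a) @ y_a

# For a linear model, the Jacobian of the task-A outputs with respect to the
# weights is X_a itself. P projects any update onto the null space of X_a,
# so X_a @ (P @ v) is zero (up to rounding) for every vector v.
P = np.eye(n_weights) - np.linalg.pinv(X_a) @ X_a

loss_a_before = loss(w_a, X_a, y_a)
loss_b_before = loss(w_a, X_b, y_b)

w = w_a.copy()
lr = n_samples / (2 * np.linalg.norm(X_b, 2) ** 2)  # stable step size
for _ in range(5000):
    grad_b = 2 / n_samples * X_b.T @ (X_b @ w - y_b)
    w = w - lr * (P @ grad_b)  # step only along output-preserving directions

loss_a_after = loss(w, X_a, y_a)
loss_b_after = loss(w, X_b, y_b)
print(f"task A loss: {loss_a_before:.6f} -> {loss_a_after:.6f}")
print(f"task B loss: {loss_b_before:.6f} -> {loss_b_after:.6f}")
```

Because every step is projected before it is applied, the model's outputs on the task-A inputs do not move while the task-B error falls. This works here only because the model has more weights than task A has constraints, which leaves spare directions in weight space to move along.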

Empowering Continuous Learning in Artificial Neural Networks

The FIP algorithm represents a significant advancement in the field of machine learning, as it enables neural networks to be continuously updated with new data without having to start from scratch. This capability has far-reaching implications, from improving recommendations on online stores to fine-tuning self-driving cars.

In 2022, with guidance from Julie Schoenfeld, Caltech Entrepreneur In Residence, Raghavan and Thomson founded a company called Yurts to further develop the FIP algorithm and deploy machine learning systems at scale to address a wide range of problems. By overcoming ‘catastrophic forgetting’, the algorithm could change how artificial intelligence systems are designed and deployed, paving the way for more flexible and adaptable technologies.